Pessimism of the Intellect, Optimism of the Will Favorite posts | Manifold podcast | Twitter: @hsu_steve

Thursday, May 31, 2012

Do not eat this kit

It doesn't seem like a lot of saliva, but it took some work to reach the fill line. Ready to go!

Sunday, May 27, 2012

Prometheus

You'll also notice some Blade Runner influences -- Ridley Scott directed Alien, Blade Runner and now Prometheus.

Saturday, May 26, 2012

Algo vs Algo and the Facebook IPO

Do we need to impose a small random delay on all transactions, or possibly a small transaction tax?

Confused? See here :-)

Did a Stuck Quote Prevent a Facebook Opening Day Pop?

On 18-May-2012, within seconds of the opening in Facebook, we noticed an exceptional occurrence: Nasdaq quotes had higher bid prices than ask prices. This is called a crossed market and occurs frequently between two different exchanges, but practically never on the same exchange (the buyer just needs to match up with the seller, which is fundamentally what an exchange does).

When Nasdaq's ask price dropped below its bid price, the quote was marked non-firm -- indicating that something is wrong with it and that software should exclude it from any best bid/offer calculations. However, in several of the earlier occurrences the first non-firm crossed quote was immediately preceded by a regular or firm crossed quote!

During the immediate period of time when the Nasdaq quote went from normal to non-firm, you can see an immediate evaporation in quotes from other exchanges, often accompanied by a flurry of trades. We first noticed this behavior while making a video of quotes during the opening period in Facebook trading.

The reaction to the crossed quote often caused the spread to widen from 1 cent to 70 cents or more in 1/10th of a second! It is important to realize that algorithms (algos) which are based on speed use existing prices (orders) from other exchanges as their primary (if not sole) input. So it is quite conceivable, if not highly likely, that these unusual and rare inverted quotes coming from Nasdaq influenced algorithms running on other exchanges.

It is now more than a curiosity that the market was unable to penetrate Nasdaq's crossed $42.99 bid, which appeared within 30 seconds of the open and remained stuck until 13:50. Could this have prevented the often expected pop (increase) in an IPO's stock price for Facebook?

This also provides another example of the dangers of placing a blind, mindless emphasis on speed above everything else.

Algos reacting to prices created by other algos reacting to prices created by still other algos. Somewhere along the way, it has to start with a price based on economic reality. But the algos at the bottom of the intelligence chain can't waste precious milliseconds for that. They are built to simply react faster than the other guy's algos. Why? Because the other guy figured out how to go faster! We don't need this in our markets. We need more intelligence. The economic and psychological costs stemming from Facebook not getting the traditional opening day pop are impossible to measure. That it may have been caused by algos reacting to a stuck quote from one exchange is not, sadly, surprising anymore.
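To make the mechanics concrete, here is a minimal Python sketch of what "crossed" and "excluded from best bid/offer calculations" mean. It is an illustration only, not how any exchange or data feed actually works: the `Quote` class, the exchange labels `EXCH_A` and `EXCH_B`, and every price except the $42.99 bid are made up for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class Quote:
    exchange: str      # hypothetical exchange label
    bid: float         # highest price a buyer is posting
    ask: float         # lowest price a seller is posting
    firm: bool = True  # False once the quote has been flagged non-firm


def is_crossed(quote: Quote) -> bool:
    """A quote is crossed when its bid is above its ask."""
    return quote.bid > quote.ask


def best_bid_offer(quotes: Iterable[Quote]) -> Optional[Tuple[float, float]]:
    """Highest bid and lowest ask, computed over firm quotes only."""
    usable = [q for q in quotes if q.firm]
    if not usable:
        return None
    return max(q.bid for q in usable), min(q.ask for q in usable)


def spread_cents(bbo: Tuple[float, float]) -> int:
    """Spread between best ask and best bid, rounded to whole cents."""
    bid, ask = bbo
    return round((ask - bid) * 100)


# Illustrative data: only the 42.99 bid comes from the text above.
stuck = Quote("NASDAQ", bid=42.99, ask=42.50)
others = [
    Quote("EXCH_A", bid=42.05, ask=42.06),
    Quote("EXCH_B", bid=42.04, ask=42.07),
]

# Stuck quote still treated as firm: the combined book looks inverted.
naive_bbo = best_bid_offer([stuck, *others])

# Stuck quote flagged non-firm: it drops out of the calculation.
flagged = Quote(stuck.exchange, stuck.bid, stuck.ask, firm=False)
clean_bbo = best_bid_offer([flagged, *others])
```

In this made-up data, an algo that fails to filter the stuck quote sees a best bid above the best ask, while one that honors the non-firm flag sees a one-cent spread. An algo keyed only to other exchanges' prices has no way to tell which picture reflects economic reality.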

Friday, May 25, 2012

The common app

A short science fiction story by one of my collaborators :-)

The common app

Robert Scherrer Nature 485, 540 (24 May 2012)

... Vanessa set her drink down on the bar. “Eastern Southwest State's a perfectly good school, Anne. And if Larry doesn't get in, they have a northern branch campus that would certainly take him.” Vanessa glanced over at George. “Our Chester will be going to Harvard, just like his father.”

“But what if he doesn't get in?” asked Anne.

Vanessa shot Anne a poisonous look. “Oh, we've made alternate plans. Princeton is an excellent back-up choice.” Vanessa smoothed back her peroxide hair. “Now, George, it's almost time. Be a sweetheart and turn on the wall screen.”

... But back in the old days, you had to fill out an application for college — not like now, where you just send in a cheek swab. ...

How long before this comes true?

Thursday, May 24, 2012

Motor City airport

Monday, May 21, 2012

Quants at the SEC

This NYTimes article reports that things have improved a bit recently there.

NYTimes: ... Embarrassed after missing the warning signs of the financial crisis and the Ponzi scheme of Bernard L. Madoff, the agency’s enforcement division has adopted several new — if somewhat unconventional — strategies to restore its credibility. The S.E.C. is taking its cue from criminal authorities, studying statistical formulas to trace connections, creating a powerful unit to cull tips and assign cases and even striking a deal with the Federal Bureau of Investigation to have agents embedded with the regulator.

... Mr. Sporkin has built a team of more than 40 former traders, exchange experts, accountants and securities lawyers to sift through roughly 200 pieces of intelligence a day, distilling the hottest tips into a daily “intelligence report.” “It’s the central intelligence office for the whole agency,” Mr. Sporkin said.

The overhaul came with an upgrade in technology. The hub of Mr. Sporkin’s outfit is a “market watch room,” replete with Bloomberg terminals and real-time stock pricing monitors that keep an eye on the markets.

... Rather than examining questionable trades in specific stocks, Mr. Hawke and his team now analyze suspicious traders and their network of connections on Wall Street. The investigators have turned to statistics, using tools like “cluster analysis” and “fuzzy matching,” to identify relationships and trading patterns that sometimes go undetected.
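As a rough illustration of what "fuzzy matching" means here, the Python sketch below flags account names that are probably the same person despite spelling differences. The article does not say which methods or thresholds the S.E.C. uses; the names, the 0.8 cutoff, and the use of the standard library's `difflib` are all assumptions made for the example.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import List, Tuple


def similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two names, ignoring case and outer spaces."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def likely_same(names: List[str], threshold: float = 0.8) -> List[Tuple[str, str]]:
    """Pairs of names whose similarity meets the (assumed) threshold."""
    return [
        (a, b)
        for a, b in combinations(names, 2)
        if similarity(a, b) >= threshold
    ]


# Hypothetical account-holder names, invented for this example.
accounts = ["Jon A. Smith", "John A Smith", "Maria Gonzalez"]
pairs = likely_same(accounts)
```

Pairs found this way could then be treated as links in a graph of traders, which is the kind of input a cluster analysis would group into networks.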

Friday, May 18, 2012

Five years of GWAS discovery

Part of the perception problem is that some biologists had anticipated that many conditions (phenotypes or disease susceptibility factors) would be controlled by small numbers of (Mendelian) genes. In my opinion, anyone with a decent understanding of complexity would have found this prior highly implausible. There is another possibility for why some researchers pushed the Mendelian scenario, perhaps without fully believing in it: drug discovery and big, rapid breakthroughs are more likely under that assumption. In any case, what has been discovered is what I anticipated: many genes, each of small effect, control each phenotype. This is no reason for despair (well, perhaps it is if your main interest is drug discovery) -- incredible science is right around the corner as costs decrease and sample sizes continue to grow. Luckily, much of the genetic variance is linear, or additive, so can be understood using relatively simple mathematics.

In the figure, note that there were no hits (SNP associations) for height until sample sizes reached close to 20,000 individuals, but progress has been rapid as sample sizes continue to grow. We are only now approaching this level of statistical power for IQ.
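A back-of-the-envelope power calculation shows why hits appear only once samples get large. The Python sketch below rests on assumptions that are not from the figure: the association test statistic is approximated as a normal variable with mean sqrt(N q), where q is the fraction of trait variance explained by one SNP; q = 0.001 is a hypothetical effect size; and 5e-8 is the conventional genome-wide significance threshold.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()
GENOME_WIDE_ALPHA = 5e-8  # conventional threshold, not taken from the figure


def gwas_power(n: int, variance_explained: float,
               alpha: float = GENOME_WIDE_ALPHA) -> float:
    """Approximate power to detect one additive SNP in a sample of size n.

    Normal approximation: the test z-score has mean sqrt(n * q) and unit
    variance, and a hit requires |z| to exceed the two-sided critical value.
    """
    z_crit = -STD_NORMAL.inv_cdf(alpha / 2)
    shift = sqrt(n * variance_explained)
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


# Hypothetical SNP explaining 0.1% of trait variance.
for n in (5_000, 20_000, 100_000):
    print(f"N = {n:>7,}: power = {gwas_power(n, 0.001):.3f}")
```

Under these assumptions, power is negligible at a few thousand individuals and approaches certainty by a hundred thousand, which is the qualitative pattern described above.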

Five Years of GWAS Discovery: ... The Cost of GWASs: If we assume that the GWAS results from Figure 1 represent a total of 500,000 SNP chips and that on average a chip costs $500, then this is a total investment of $250 million. If there are a total of ~2,000 loci detected across all traits, then this implies an investment of $125,000 per discovered locus. Is that a good investment? We think so: The total amount of money spent on candidate-gene studies and linkage analyses in the 1990s and 2000s probably exceeds $250M, and they in total have had little to show for it. Also, it is worthwhile to put these amounts in context. $250M is of the order of the cost of one or two stealth fighter jets and much less than the cost of a single navy submarine. It is a fraction of the ~$9 billion cost of the Large Hadron Collider. It would also pay for about 100 R01 grants. Would those 100 non-funded R01 grants have made breakthrough discoveries in biology and medicine? We simply can’t answer this question, but we can conclude that a tremendous number of genuinely new discoveries have been made in a period of only five years.
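The arithmetic in the quoted cost estimate is easy to check. The short Python sketch below uses only the figures given in the passage; the variable names are mine, and the per-grant figure is simply what "about 100 R01 grants" implies, not a number stated by the authors.

```python
# Figures quoted in the passage above.
snp_chips = 500_000
cost_per_chip_usd = 500
loci_detected = 2_000
lhc_cost_usd = 9_000_000_000
r01_grants = 100

total_usd = snp_chips * cost_per_chip_usd
usd_per_locus = total_usd // loci_detected
fraction_of_lhc = total_usd / lhc_cost_usd
implied_usd_per_r01 = total_usd // r01_grants  # implied, not stated in the text

print(f"Total investment:   ${total_usd:,}")
print(f"Cost per locus:     ${usd_per_locus:,}")
print(f"Share of LHC cost:  {fraction_of_lhc:.1%}")
print(f"Implied R01 budget: ${implied_usd_per_r01:,}")
```

This reproduces the $250 million total and the $125,000 per locus, and puts the whole effort at roughly 3 percent of the LHC's cost.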

... The combination of large sample sizes and stringent significance testing has led to a large number of robust and replicable associations between complex traits and genetic variants, many of which are in meaningful biological pathways. A number of variants or different variants at the same loci have been shown to be associated with the same trait in different ethnic populations, and some loci are even replicated across species. The combination of multiple variants with small effect sizes has been shown to predict disease status or phenotype in independent samples from the same population. Clearly, these results are not consistent with flawed inferences from GWASs.

... In conclusion, in a period of less than five years, the GWAS experimental design in human populations has led to new discoveries about genes and pathways involved in common diseases and other complex traits, has provided a wealth of new biological insights, has led to discoveries with direct clinical utility, and has facilitated basic research in human genetics and genomics. For the future, technological advances enabling the sequencing of entire genomes in large samples at affordable prices is likely to generate additional genes, pathways, and biological insights, as well as to identify causal mutations.

What was once science fiction will soon be reality.

Long ago I sketched out a science fiction story involving two Junior Fellows, one a bioengineer (a former physicist, building the next generation of sequencing machines) and the other a mathematician. The latter, an eccentric, was known for collecting signatures -- signed copies of papers and books authored by visiting geniuses (Nobelists, Fields Medalists, Turing Award winners) attending the Society's Monday dinners. He would present each luminary with an ornate (strangely sticky) fountain pen and a copy of the object to be signed. Little did anyone suspect the real purpose: collecting DNA samples to be turned over to his friend for sequencing! The mathematician is later found dead under strange circumstances. Perhaps he knew too much! ...

Wednesday, May 16, 2012

Eurodammerung

NYTimes: Some of us have been talking it over, and here’s what we think the end game looks like:

1. Greek euro exit, very possibly next month.

2. Huge withdrawals from Spanish and Italian banks, as depositors try to move their money to Germany.

3a. Maybe, just possibly, de facto controls, with banks forbidden to transfer deposits out of country and limits on cash withdrawals.

3b. Alternatively, or maybe in tandem, huge draws on ECB credit to keep the banks from collapsing.

4a. Germany has a choice. Accept huge indirect public claims on Italy and Spain, plus a drastic revision of strategy — basically, to give Spain in particular any hope you need both guarantees on its debt to hold borrowing costs down and a higher eurozone inflation target to make relative price adjustment possible; or:

4b. End of the euro.

And we’re talking about months, not years, for this to play out.

Tuesday, May 15, 2012

Modeling gluttony

(academic science) --> (Wall St.) --> (academic science) --> (Wall St.) --> (academic science)

(Notice the yo-yo pattern? ;-)

NYTimes: You are an M.I.T.-trained mathematician and physicist. How did you come to work on obesity? In 2004, while on the faculty of the math department at the University of Pittsburgh, I married. My wife is a Johns Hopkins ophthalmologist, and she would not move. So I began looking for work in the Beltway area. Through the grapevine, I heard that the N.I.D.D.K., a branch of the National Institutes of Health, was building up its mathematics laboratory to study obesity. At the time, I knew almost nothing of obesity.

I didn’t even know what a calorie was. I quickly read every scientific paper I could get my hands on.

I could see the facts on the epidemic were quite astounding. Between 1975 and 2005, the average weight of Americans had increased by about 20 pounds. Since the 1970s, the national obesity rate had jumped from around 20 percent to over 30 percent.

Monday, May 14, 2012

Stanford and Silicon Valley

NewYorker: ... If the Ivy League was the breeding ground for the élites of the American Century, Stanford is the farm system for Silicon Valley. When looking for engineers, Schmidt said, Google starts at Stanford. Five per cent of Google employees are Stanford graduates. The president of Stanford, John L. Hennessy, is a director of Google; he is also a director of Cisco Systems and a successful former entrepreneur. Stanford’s Office of Technology Licensing has licensed eight thousand campus-inspired inventions, and has generated $1.3 billion in royalties for the university. Stanford’s public-relations arm proclaims that five thousand companies “trace their origins to Stanford ideas or to Stanford faculty and students.” They include Hewlett-Packard, Yahoo, Cisco Systems, Sun Microsystems, eBay, Netflix, Electronic Arts, Intuit, Fairchild Semiconductor, Agilent Technologies, Silicon Graphics, LinkedIn, and E*Trade.... There are probably more faculty millionaires at Stanford than at any other university in the world. Hennessy earned six hundred and seventy-one thousand dollars in salary from Stanford last year, but he has made far more as a board member of and shareholder in Google and Cisco.

Very often, the wealth created by Stanford’s faculty and students flows back to the school. Hennessy is among the foremost fund-raisers in America. In his twelve years as president, Stanford’s endowment has grown to nearly seventeen billion dollars. In each of the past seven years, Stanford has raised more money than any other American university.

... A quarter of all undergraduates and more than fifty per cent of graduate students are engineering majors. At Harvard, the figures are four and ten per cent; at Yale, they’re five and eight per cent.

Wednesday, May 09, 2012

Entanglement and Decoherence

Buniy and Hsu also seem to be confused about the topics that have been covered hundreds of times on this blog. In particular, the right interpretation of the state is a subjective one. Consequently, all the properties of a state – e.g. its being entangled – are subjective as well. They depend on what the observer just knows at a given moment. Once he knows the detailed state of objects or observables, their previous entanglement becomes irrelevant.

... When I read papers such as one by Buniy and Hsu, I constantly see the wrong assumption written everything in between the lines – and sometimes inside the lines – that the wave function is an objective wave and one may objectively discuss its properties. Moreover, they really deny that the state vector should be updated when an observable is changed. But that's exactly what you should do. The state vector is a collection of complex numbers that describe the probabilistic knowledge about a physical system available to an observer and when the observer measures an observable, the state instantly changes because the state is his knowledge and the knowledge changes!

In the section of our paper on Schmidt decomposition, we write

A measurement of subsystem A which determines it to be in state ψ^(n)_A implies that the rest of the universe must be in state ψ^(n)_B. For example, A might consist of a few spins [9]; it is interesting, and perhaps unexpected, that a measurement of these spins places the rest of the universe into a particular state ψ^(n)_B. As we will see below, in the cosmological context these modes are spread throughout the universe, mostly beyond our horizon. Because we do not have access to these modes, they do not necessarily prevent us from detecting A in a superposition of two or more of the ψ^(n)_A. However, if we had sufficient access to B degrees of freedom (for example, if the relevant information differentiating between ψ^(n)_A states is readily accessible in our local environment or in our memory records), then the A system would decohere into one of the ψ^(n)_A.

This discussion makes it clear that ψ describes all possible branches of the wavefunction, including those that may have already decohered from each other: it describes not just the subjective experience of one observer, but of all possible observers. If we insist on removing decohered branches from the wavefunction (e.g., via collapse or von Neumann projection), then much of the entanglement we discuss in the paper is also excised. However, if we only remove branches that are inconsistent with the observations of a specific single observer, most of it will remain. Note decoherence is a continuous and (in principle) reversible phenomenon, so (at least within a unitary framework) there is no point at which one can say two outcomes have entirely decohered -- one can merely cite the smallness of overlap between the two branches or the level of improbability of interference between them.

I don't think Lubos disagrees with the mathematical statements we make about the entanglement properties of ψ. He may claim that these entanglement properties are not subject to experimental test. At least in principle, one can test whether systems A and B, which are in two different horizon volumes at cosmological time t1, are entangled. We have to wait until some later time t2, when there has been enough time for classical communication between A and B, but otherwise the protocol for determining entanglement is the usual one.

If we leave aside cosmology and consider, for example, the atoms or photons in a box, the same formalism we employ shows that there is likely to be widespread entanglement among the particles. In principle, an experimentalist who is outside the box can test whether the state ψ describing the box is "typical" (i.e., highly entangled) by making very precise measurements.
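For readers who want to see the Schmidt decomposition in the smallest possible setting, here is a toy Python sketch for two qubits. It is not the cosmological calculation in the paper: the two-qubit system, the function names, and the use of entanglement entropy as the measure are choices made for illustration. The amplitudes c[i][j] multiply the basis states |i>_A |j>_B, and the squared Schmidt coefficients are the eigenvalues of the reduced density matrix of A.

```python
from math import log2, sqrt
from typing import List, Tuple

Amplitudes = List[List[complex]]  # c[i][j] multiplies |i>_A |j>_B


def schmidt_probabilities(c: Amplitudes) -> Tuple[float, float]:
    """Squared Schmidt coefficients of a two-qubit pure state.

    These are the eigenvalues of rho_A = C C^dagger, normalized to trace 1.
    """
    rho_00 = sum(abs(complex(c[0][j])) ** 2 for j in range(2))
    rho_11 = sum(abs(complex(c[1][j])) ** 2 for j in range(2))
    rho_01 = sum(complex(c[0][j]) * complex(c[1][j]).conjugate() for j in range(2))
    norm = rho_00 + rho_11
    det = (rho_00 * rho_11 - abs(rho_01) ** 2) / norm ** 2
    # Eigenvalues of a 2x2 Hermitian matrix with unit trace: 1/2 +/- disc.
    disc = sqrt(max(0.0, 0.25 - det))
    return 0.5 + disc, 0.5 - disc


def entanglement_entropy(c: Amplitudes) -> float:
    """Von Neumann entropy of subsystem A in bits: 0 = product, 1 = maximal."""
    return sum(-p * log2(p) for p in schmidt_probabilities(c) if p > 1e-15)


s = 1 / sqrt(2)
bell_state = [[s, 0], [0, s]]     # (|00> + |11>) / sqrt(2)
product_state = [[s, 0], [s, 0]]  # (|0> + |1>) / sqrt(2) tensor |0>

print(entanglement_entropy(bell_state))
print(entanglement_entropy(product_state))
```

For the Bell state the two Schmidt probabilities are equal, so finding A in one Schmidt state fixes the state of B, which is the two-qubit analogue of the statement quoted from the paper. For the product state there is a single nonzero Schmidt coefficient and measuring A tells you nothing about B.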

See stackexchange for more discussion.

Tuesday, May 08, 2012

Everything is Entangled

http://arxiv.org/abs/1205.1584

Everything is Entangled

Roman V. Buniy, Stephen D.H. Hsu

We show that big bang cosmology implies a high degree of entanglement of particles in the universe. In fact, a typical particle is entangled with many particles far outside our horizon. However, the entanglement is spread nearly uniformly so that two randomly chosen particles are unlikely to be directly entangled with each other -- the reduced density matrix describing any pair is likely to be separable.

From the introduction:

Ergodicity and properties of typical pure states

When two particles interact, their quantum states generally become entangled. Further interaction with other particles spreads the entanglement far and wide. Subsequent local manipulations of separated particles cannot, in the absence of quantum communication, undo the entanglement. We know from big bang cosmology that our universe was in thermal equilibrium at early times, and we believe, due to the uniformity of the cosmic microwave background, that regions which today are out of causal contact were once in equilibrium with each other. Below we show that these simple observations allow us to characterize many aspects of cosmological entanglement.

We will utilize the properties of typical pure states in quantum mechanics. These are states which dominate the Hilbert measure. The ergodic theorem proved by von Neumann implies that under Schrodinger evolution most systems spend almost all their time in typical states. Indeed, systems in thermal equilibrium have nearly maximal entropy and hence must be typical. Typical states are maximally entangled (see below) and the approach to equilibrium can be thought of in terms of the spread of entanglement. ...

Professor Buniy in action! (Working on this research.)

The truth about venture capital

EXECUTIVE SUMMARY

Venture capital (VC) has delivered poor returns for more than a decade. VC returns haven’t significantly outperformed the public market since the late 1990s, and, since 1997, less cash has been returned to investors than has been invested in VC. Speculation among industry insiders is that the VC model is broken, despite occasional high-profile successes like Groupon, Zynga, LinkedIn, and Facebook in recent years.

The Kauffman Foundation investment team analyzed our twenty-year history of venture investing experience in nearly 100 VC funds with some of the most notable and exclusive partnership “brands” and concluded that the Limited Partner (LP) investment model is broken. Limited Partners (foundations, endowments, and state pension funds) invest too much capital in underperforming venture capital funds on frequently mis-aligned terms.

Our research suggests that investors like us succumb time and again to narrative fallacies, a well-studied behavioral finance bias. We found in our own portfolio that:

Only twenty of 100 venture funds generated returns that beat a public-market equivalent by more than 3 percent annually, and half of those began investing prior to 1995.

The majority of funds—sixty-two out of 100—failed to exceed returns available from the public markets, after fees and carry were paid.

There is not consistent evidence of a J-curve in venture investing since 1997; the typical Kauffman Foundation venture fund reported peak internal rates of return (IRRs) and investment multiples early in a fund’s life (while still in the typical sixty-month investment period), followed by serial fundraising in month twenty-seven.

Only four of thirty venture capital funds with committed capital of more than $400 million delivered returns better than those available from a publicly traded small cap common stock index.

Of eighty-eight venture funds in our sample, sixty-six failed to deliver expected venture rates of return in the first twenty-seven months (prior to serial fundraises). The cumulative effect of fees, carry, and the uneven nature of venture investing ultimately left us with sixty-nine funds (78 percent) that did not achieve returns sufficient to reward us for patient, expensive, long-term investing.

Investment committees and trustees should shoulder blame for the broken LP investment model, as they have created the conditions for the chronic misallocation of capital. ...

See earlier post How to run a hedge fund.

Monday, May 07, 2012

NRC physics ranking by research output

In the list below I would say MIT and Stanford deserve higher ratings, and UO is (alas) ranked higher than it deserves. Once you get beyond the top 10 or so physics departments, there are many good programs across the country, and it's hard to differentiate between them.

Saturday, May 05, 2012

Exceptional Cognitive Ability: The Phenotype

See also related posts.

There does not appear to be an “ability threshold” (i.e., a point at which, say, beyond an IQ of 115 or 120, more ability does not matter). Although other things like ambition and opportunity clearly matter, more ability is better. The data also suggest the importance of going beyond general ability level when characterizing exceptional phenotypes, because specific abilities add nuance to predictions across different domains of talent development. Differential ability patterns, in this case verbal relative to mathematical ability and vice versa, are differentially related to accomplishments that draw on different intellectual strengths. Exceptional cognitive abilities do appear to be involved in creative expression, or “abstract noegenesis” (Spearman and Jones, 1950). That these abilities are readily detectable at age 12 is especially noteworthy.

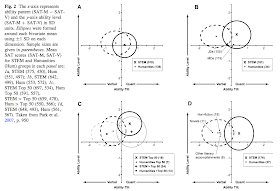

This figure, describing the same study population, may also be of interest:

Earlier discussion:

Scores are normalized in units of SDs. The vertical axis is V, the horizontal axis is M, and the length of the arrow reflects spatial ability: pointing to the right means above the group average, to the left means below average; note the arrow for business majors should be twice as long as indicated but there was not enough space on the diagram. The spatial score is obviously correlated with the M score.

Upper right = high V, high M (e.g., physical science)

Upper left = high V, lower M (e.g., humanities, social science)

Lower left = lower V, lower M (e.g., business, law)

Lower right = lower V, high M (e.g., math, engineering, CS)

Thursday, May 03, 2012

Jensen on g and genius

Mega Questions for Renowned Psychologist Dr. Arthur R. Jensen - Interview by Christopher Michael Langan and Dr. Gina LoSasso and members of the Mega Foundation, Mega Society East and Ultranet

Question #1:

Christopher Langan for the Mega Foundation: It is reported that one of this century’s greatest physicists, Nobelist Richard Feynman, had an IQ of 125 or so. Yet, a careful reading of his work reveals amazing powers of concentration and analysis…powers of thought far in excess of those suggested by a z score of well under two standard deviations above the population mean. Could this be evidence that something might be wrong with the way intelligence is tested? Could it mean that early crystallization of intelligence, or specialization of intelligence in a specific set of (sub-g) factors – i.e., a narrow investment of g based on a lopsided combination of opportunity and proclivity - might put it beyond the reach of g-loaded tests weak in those specific factors, leading to deceptive results?

Arthur Jensen: I don’t take anecdotal reports of the IQs of famous persons at all seriously. They are often fictitious and are used to make a point - typically a put-down of IQ tests and the whole idea that individual differences in intelligence can be ranked or measured. James Watson once claimed an IQ of 115; the daughter of another very famous Nobelist claimed that her father would absolutely “flunk” any IQ test. It’s all ridiculous.

Furthermore, the outstanding feature of any famous and accomplished person, especially a reputed genius, such as Feynman, is never their level of g (or their IQ), but some special talent and some other traits (e.g., zeal, persistence). Outstanding achievement(s) depend on these other qualities besides high intelligence. The special talents, such as mathematical, musical, artistic, literary, or any other of the various “multiple intelligences” that have been mentioned by Howard Gardner and others are more salient in the achievements of geniuses than is their typically high level of g. Most very high-IQ people, of course, are not recognized as geniuses, because they haven’t any very outstanding creative achievements to their credit. However, there is a threshold property of IQ, or g, below which few if any individuals are even able to develop high-level complex talents or become known for socially significant intellectual or artistic achievements. This bare minimum threshold is probably somewhere between about +1.5 sigma and +2 sigma from the population mean on highly g-loaded tests.

Childhood IQs that are at least above this threshold can also be misleading. There are two famous scientific geniuses, both Nobelists in physics, whose childhood IQs are very well authenticated to have been in the mid-130s. They are on record and were tested by none other than Lewis Terman himself, in his search for subjects in his well-known study of gifted children with IQs of 140 or above on the Stanford-Binet intelligence test. Although these two boys were brought to Terman’s attention because they were mathematical prodigies, they failed by a few IQ points to meet the one and only criterion (IQ > 139) for inclusion in Terman’s study. Although Terman was impressed by them, as a good scientist he had to exclude them from his sample of high-IQ kids. Yet none of the 1,500+ subjects in the study ever won a Nobel Prize or has a biography in the Encyclopedia Britannica as these two fellows did. Not only were they gifted mathematically, they had a combination of other traits without which they probably would not have become generally recognized as scientific and inventive geniuses. So-called intelligence tests, or IQ, are not intended to assess these special abilities unrelated to IQ or any other traits involved in outstanding achievement. It would be undesirable for IQ tests to attempt to do so, as it would be undesirable for a clinical thermometer to measure not just temperature but some combination of temperature, blood count, metabolic rate, etc. A good IQ test attempts to estimate the g factor, which isn’t a mixture, but a distillate of the one factor (i.e., a unitary source of individual differences variance) that is common to all cognitive tests, however diverse.

I have had personal encounters with three Nobelists in science, including Feynman, who attended a lecture I gave at Cal Tech and later discussed it with me. He, like the other two Nobelists I’ve known (Francis Crick and William Shockley), not only came across as extremely sharp, especially in mathematical reasoning, but they were also rather obsessive about making sure they thoroughly understood the topic under immediate discussion. They at times transformed my verbal statements into graphical or mathematical forms and relationships. Two of these men knew each other very well and often discussed problems with each other. Each thought the other was very smart. I got a chance to test one of these Nobelists with Terman’s Concept Mastery Test, which was developed to test the Terman gifted group as adults, and he obtained an exceptionally high score even compared to the Terman group all with IQ > 139 and a mean of 152.

I have written an essay relevant to this whole question: “Giftedness and genius: Crucial differences.” In C. P. Benbow & D. Lubinski (Eds.) Intellectual Talent: Psychometric and Social Issues, pp. 393-411. Baltimore: Johns Hopkins University Press.

See here for data relevant to this topic and the discussion in the comments.
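The sigma figures in the interview are easy to translate back and forth. The Python sketch below assumes IQ is normally distributed with mean 100 and SD 15; that scaling is my assumption, not something stated in the interview, and some tests use a different SD, so treat the outputs as rough.

```python
from statistics import NormalDist

IQ_MEAN = 100.0  # assumed scaling, not stated in the interview
IQ_SD = 15.0     # assumed; some tests use a different SD
STD_NORMAL = NormalDist()


def z_score(iq: float) -> float:
    """Standard deviations above (or below) the population mean."""
    return (iq - IQ_MEAN) / IQ_SD


def iq_at_sigma(z: float) -> float:
    """IQ score corresponding to a given z score."""
    return IQ_MEAN + z * IQ_SD


def fraction_above(iq: float) -> float:
    """Share of a normally distributed population scoring above this IQ."""
    return 1.0 - STD_NORMAL.cdf(z_score(iq))


for label, iq in [("reported Feynman IQ", 125.0),
                  ("threshold, low end", iq_at_sigma(1.5)),
                  ("threshold, high end", iq_at_sigma(2.0)),
                  ("Terman cutoff", 140.0)]:
    print(f"{label:20s} IQ {iq:5.1f}  z = {z_score(iq):+.2f}  "
          f"fraction above = {fraction_above(iq):.4f}")
```

On this scaling an IQ of 125 sits about 1.67 SD above the mean, consistent with Langan's "well under two standard deviations", and Jensen's +1.5 to +2 sigma threshold corresponds to IQs of 122.5 to 130.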

Theory and experiment

Chapter 4: I learned about the discovery of nuclear fission in the Berkeley campus barbershop one morning in late January 1939, while my hair was being cut. Buried on an inside page of the Chronicle was a story from Washington reporting Bohr's announcement that German chemists had split the uranium atom by bombarding it with neutrons. I stopped the barber in mid-snip and ran all the way to the Rad Lab to spread the word. The first person I saw was my graduate student Phil Abelson. I knew the news would shock him. "I have something terribly important to tell you," I said. "I think you should lie down on the table." Phil sensed my seriousness and complied. I told him what I had read. He was stunned; he realized immediately, as I had before, that he was within days of making the same discovery himself.

... I tracked down Oppenheimer working with his entourage in his bullpen in LeConte Hall. He instantly pronounced the reaction impossible and proceeded to prove mathematically to everyone in the room that someone must have made a mistake. The next day Ken Green and I demonstrated the reaction. I invited Robert over to see [it] ... In less than 15 minutes he not only agreed that the reaction was authentic but also speculated that in the process extra neutrons would boil off that could be used to split more uranium atoms and thereby generate power or make bombs. It was amazing to see how rapidly his mind worked, and he came to the right conclusions. His response demonstrated the scientific ethic at its best. When we proved that his previous position was untenable, he accepted the evidence with good grace, and without looking back he immediately turned to examining where the new knowledge might lead.

This short passage illustrates many aspects of science: the role of luck, the convergence of different avenues of investigation, the overconfidence of theorists and the supremacy of experiments in discerning reality, the startling reach of a powerful mind.

Tuesday, May 01, 2012

Risk taking and innovation: lawyers and art history majors

NYTimes ... In a competitive market, all that’s left are the truly hard puzzles. And they require extraordinary resources. While we often hear about the greatest successes — penicillin, the iPhone — we rarely hear about the countless failures and the people and companies who financed them.

A central problem with the U.S. economy, he told me, is finding a way to get more people to look for solutions despite these terrible odds of success. Conard’s solution is simple. Society benefits if the successful risk takers get a lot of money. For proof, he looks to the market. At a nearby table we saw three young people with plaid shirts and floppy hair. For all we know, they may have been plotting the next generation’s Twitter, but Conard felt sure they were merely lounging on the sidelines. “What are they doing, sitting here, having a coffee at 2:30?” he asked. “I’m sure those guys are college-educated.” Conard, who occasionally flashed a mean streak during our talks, started calling the group “art-history majors,” his derisive term for pretty much anyone who was lucky enough to be born with the talent and opportunity to join the risk-taking, innovation-hunting mechanism but who chose instead a less competitive life. In Conard’s mind, this includes, surprisingly, people like lawyers, who opt for stable professions that don’t maximize their wealth-creating potential. He said the only way to persuade these “art-history majors” to join the fiercely competitive economic mechanism is to tempt them with extraordinary payoffs.